I recently started FPGA programming and figured it would be fun to use an FPGA to implement the FizzBuzz algorithm.

An FPGA (Field-Programmable Gate Array) is an interesting chip that you can program to implement arbitrary digital logic.

This lets you build a complex digital circuit without wiring up individual gates and flip flops.

It's like having a custom chip that can be anything from a logic analyzer to a microprocessor to a video generator.

The "FizzBuzz test" is to write a program that prints the numbers from 1 to 100, except multiples of 3 are replaced with the word "Fizz", multiples of 5 with "Buzz" and multiples of both with "FizzBuzz".

Since FizzBuzz can be implemented in a few lines of code, it is used as a programming interview question to weed out people who can't program at all.

![The Mojo FPGA board, connected to a serial-to-USB interface. The big chip on the Mojo is the Spartan 6 FPGA.]()

The Mojo FPGA board, connected to a serial-to-USB interface. The big chip on the Mojo is the Spartan 6 FPGA.

Implementing FizzBuzz in digital logic (as opposed to code) is rather pointless, but I figured it would be a good way to learn FPGAs.[1]

For this project, I used the Mojo V3 FPGA development board (shown above), which was designed to be an easy-to-use starter board.

It uses an FPGA chip from Xilinx's Spartan 6 family. Although the Mojo's FPGA is one of the smallest Spartan 6 chips, it still contains over 9000 logic cells and 11,000 flip flops, so it can do a lot.

Implementing serial output on the FPGA

What does it mean to implement FizzBuzz on an FPGA? The general-purpose I/O pins of an FPGA could be connected to anything, so the FizzBuzz output could be displayed in many different ways such as LEDs, seven-segment displays, an LCD panel, or a VGA monitor.

I decided that outputting the text over a serial line to a terminal was the closest in spirit to a "standard" FizzBuzz program.

So the first step was to implement serial output on the FPGA.

The basic idea of serial communication is to send bits over a wire, one at a time.

The RS-232 serial protocol is a simple protocol for serial data, invented in 1960 for connecting things like teletypes and modems.

The diagram below shows how the character "F" (binary 01000110) would be sent serially over the wire.

First, a start bit (low) is sent to indicate the start of a character.[2] Next, the 8 bits of the character are sent in reverse order, that is, least-significant bit first.

Finally, a stop bit (high) is sent to indicate the end of the character.

The line sits idle (high) between characters until another character is ready to send.

For a baud rate of 9600, each bit is sent for 1/9600 of a second. With 8 data bits, no parity bit, and 1 stop bit, the protocol is known as 8N1. Many different serial protocols are in use, but 9600 8N1 is a very common one.

![Serial line output of the character "F" sent at 9600 baud / 8N1.]()

Serial line output of the character "F" sent at 9600 baud / 8N1.
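The 8N1 framing described above can be sketched as a single concatenation. This is only an illustration, not the serial module from this project; the module and signal names are made up for the example.

```verilog
// Illustrative sketch: frame_8n1, data and frame are hypothetical names.
module frame_8n1 (
    input  wire [7:0] data,   // character to send, e.g. "F" = 8'b01000110
    output wire [9:0] frame   // frame[0] goes on the wire first
);
    localparam START_BIT = 1'b0;  // line pulled low
    localparam STOP_BIT  = 1'b1;  // line returns high (the idle level)

    // Concatenation is written MSB-first, so the stop bit lands in frame[9],
    // data[0] in frame[1], and the start bit in frame[0].
    assign frame = {STOP_BIT, data, START_BIT};
endmodule
```

Shifting `frame` out starting from bit 0 gives the order in the diagram: start bit, data bits 0 through 7, stop bit.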

The first step in implementing this serial output was to produce the 1/9600 second intervals for each bit.

This interval can be measured by counting 5208 clock pulses on the Mojo.[3]

I implemented this by using a 13-bit counter to repeatedly count from 0 to 5207.

To keep track of which bit is being output in each interval, I used a simple state machine that advanced through the start bit, the 8 data bits, and the stop bit.

The state is held in a 4-bit register.

(With FPGAs, you end up dealing a lot with clock pulses, counters, and state machines.)
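As a rough sketch of where the numbers 5208 and 13 come from, the divider can be derived from the clock frequency. The 50 MHz figure below is my assumption about the Mojo's oscillator, and the module, parameter, and signal names are invented for this example.

```verilog
// Illustrative sketch: CLK_HZ = 50 MHz is an assumption, and all names are hypothetical.
module baud_tick #(
    parameter CLK_HZ = 50_000_000,
    parameter BAUD   = 9600
) (
    input  wire clk,
    input  wire rst,    // synchronous reset
    output reg  tick    // high for one clock out of every DIVISOR clocks
);
    localparam DIVISOR = CLK_HZ / BAUD;     // integer division gives 5208
    localparam WIDTH   = $clog2(DIVISOR);   // 13 bits are enough for 0..5207

    reg [WIDTH-1:0] counter;

    always @(posedge clk) begin
        if (rst || counter == DIVISOR - 1) begin
            counter <= 0;
        end else begin
            counter <= counter + 1;
        end
        // Registered pulse marking the end of each bit interval.
        tick <= !rst && (counter == DIVISOR - 1);
    end
endmodule
```

Because 50,000,000 / 9600 is not a whole number, the integer divisor makes each bit very slightly short, which is well within what a serial receiver tolerates.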

To create the interval and state registers in the FPGA chip, I wrote code in the Verilog hardware description language.

I won't explain Verilog thoroughly, but hopefully you can get a feel for how it works.

In the code below, the first lines define a 13-bit register called counter and a 4-bit register called state. The counter is incremented until it reaches 5207, at which time the counter is reset to 0 and state is incremented to process the next output bit.

(Note that <= is an assignment operator here, not a comparison.[4])

The line always @(posedge clk) indicates that the code is executed on the positive edge of each clock.

    reg [12:0] counter;
    reg [3:0] state;

    always @(posedge clk) begin
      if (counter < 5207) begin
        counter <= counter + 1;
      end else begin
        counter <= 0;
        state <= state + 1;
      end
    end

While this may look like code in a normal programming language, it operates entirely differently.

In a normal language, operations usually take place sequentially as the program is executed line by line.

For instance, the processor would check the value of counter. It would then add 1 to counter.

But in Verilog, there's no processor and no program being executed. Instead, the code generates hardware to perform the operations.

For example, an adder circuit is created to increment counter, and a separate adder to increment state, and additional logic for the comparison with 5207.

Unlike the sequential processor, the FPGA does everything in parallel.

For instance, the FPGA does the 5207 comparison, the increment or reset of counter and the increment of state all in parallel on each clock pulse.

Because of this parallelism, FPGAs can be much faster than processors for highly parallel tasks.
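A small example may make the parallel behavior more concrete. The sketch below is not part of the FizzBuzz design, and its names are made up: it swaps two registers on every clock without a temporary variable, something that would not work if the two assignments ran one after the other.

```verilog
// Illustrative sketch: swap_demo and its ports are hypothetical names.
module swap_demo (
    input  wire       clk,
    input  wire       load,   // when high, load new values instead of swapping
    input  wire [3:0] a_in,
    input  wire [3:0] b_in,
    output reg  [3:0] a,
    output reg  [3:0] b
);
    always @(posedge clk) begin
        if (load) begin
            a <= a_in;
            b <= b_in;
        end else begin
            // Both right-hand sides are sampled at the clock edge, then both
            // registers update together, so the two values trade places.
            a <= b;
            b <= a;
        end
    end
endmodule
```

In a sequential language, the equivalent of `a = b; b = a;` would leave both variables holding the old value of `b`.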

The next part of the serial code (below) outputs the appropriate bit for each state.

As before, while this looks like a normal programming language, it is generating hardware circuits, not operations that are executed sequentially.

In this case, the code creates gate logic (essentially a multiplexer) to select the right value for out.

    case (state)
      IDLE: out = MARK; // high
      START: out = SPACE; // low
      BIT0: out = char1[0];
      BIT1: out = char1[1];
      ...
      BIT6: out = char1[6];
      STOP: out = MARK;
      default: out = MARK;
    endcase

There's a bit more code for the serial module to define constants, initialize the counters, and start and stop each character, but the above code should give you an idea of how Verilog works.

The full serial code is here.

The FizzBuzz Algorithm

The next step is figuring out what to send over the serial line.

How do we convert the numbers from 1 to 100 into ASCII characters?

This is trivial when programming a microprocessor, but hard with digital logic. The problem is that converting a binary number to decimal digits requires division by 10 and 100, and division is very inconvenient to implement with gates.

My solution was to use a binary-coded decimal (BCD) counter, storing each of the three digits separately.

This made the counter slightly more complicated, since each digit needs to wrap at 9, but it made printing the digits easy.

I wrote a BCD counter module (source) to implement the 3-digit counter. It has three 4-bit counters digit2, digit1, and digit0.

The flag increment indicates that the counter should be incremented. Usually just digit0 is incremented. But if digit0 is 9, it wraps to 0 and digit1 is incremented. If digit1 is also 9, it wraps to 0 as well and digit2 is incremented. Thus, the digits will count from 000 to 999.

    if (increment) begin
      if (digit0 != 9) begin
        // Regular increment digit 0
        digit0 <= digit0 + 1;
      end else begin
        // Carry from digit 0
        digit0 <= 0;
        if (digit1 != 9) begin
          // Regular increment digit 1
          digit1 <= digit1 + 1;
        end else begin
          // Carry from digit 1
          digit1 <= 0;
          digit2 <= digit2 + 1;
        end
      end
    end

As before, keep in mind that while this looks like normal program code, it turns into a bunch of logic gates, generating the new values for digit2, digit1 and digit0 on each clock cycle.

The system isn't executing instructions in sequence, so performance isn't limited by the number of instructions but just by the delay for signals to propagate through the gates.

The next challenge was testing if the number was divisible by 3 or 5.

Like division, the modulo operation is easy on a microprocessor, but hard with digital logic.

There's no built-in divide operation, so modulo needs to be computed with a big pile of gates.

Although the IDE can synthesize the gates for a modulo operation, it seemed inelegant.

Instead, I simply kept counters for the value modulo 3 and the value modulo 5. The value modulo 3, for instance, would simply count 0, 1, 2, 0, 1, 2, ...[5]
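A minimal sketch of such wrap-around counters is shown below. The module and port names are illustrative, and resetting both counters to 1 is an assumption made so that they line up with a main count that starts at 1.

```verilog
// Illustrative sketch: names and reset values are assumptions, not the project's code.
module mod_counters (
    input  wire       clk,
    input  wire       rst,        // synchronous reset to the state for n = 1
    input  wire       increment,  // advance together with the main counter
    output reg  [1:0] mod3,       // n modulo 3
    output reg  [2:0] mod5        // n modulo 5
);
    always @(posedge clk) begin
        if (rst) begin
            mod3 <= 2'd1;
            mod5 <= 3'd1;
        end else if (increment) begin
            // Each counter wraps to 0 instead of ever performing a division.
            mod3 <= (mod3 == 2'd2) ? 2'd0 : mod3 + 2'd1;
            mod5 <= (mod5 == 3'd4) ? 3'd0 : mod5 + 3'd1;
        end
    end
endmodule
```

A multiple of 3 is then simply the case `mod3 == 0`, and a multiple of 5 is `mod5 == 0`, which costs a couple of small registers rather than a divider.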

The final piece of FizzBuzz was the code to output each line, character by character.

In a program, we could simply call the serial output routine for each character.

But in an FPGA, we need to keep track of which character is being sent, with yet another state machine.

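Note that to convert each digit to an ASCII character, the binary bits 11 are prepended to the 4-bit digit, using the slightly strange syntax 2'b11 (a 2-bit binary constant). This yields hexadecimal 30 plus the digit, which is the ASCII code for the characters "0" through "9".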

The code excerpt below is slightly simplified; the full code includes leading zero checks so "001" will print as "1".

    state <= state + 1; // Different state from serial
    if (mod3 == 0 && mod5 != 0) begin
      // Fizz
      case (state)
        1: char <= "F";
        2: char <= "i";
        3: char <= "z";
        4: char <= "z";
        5: char <= "\r";
        6: begin
          char <= "\n";
          state <= NEXT; // Done with output line
        end
      endcase
    end else if (mod3 != 0 && mod5 == 0) begin
      ... Buzz case omitted ...
    end else if (mod3 == 0 && mod5 == 0) begin
      ... Fizzbuzz case omitted ...
    end else begin
      // No divisors; output the digits of the number.
      case (state)
        1: char <= {2'b11, digit2[3:0]};
        2: char <= {2'b11, digit1[3:0]};
        3: char <= {2'b11, digit0[3:0]};
        4: char <= "\r";
        5: begin
          char <= "\n";
          state <= NEXT;
        end
      endcase
    end
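The digit-to-ASCII concatenation used in the excerpt above can be isolated into a tiny module to see what it produces. This is a sketch with made-up names, assuming an 8-bit character output.

```verilog
// Illustrative sketch: digit_to_ascii is a hypothetical helper, not part of the project.
module digit_to_ascii (
    input  wire [3:0] digit,   // BCD digit, 0..9
    output wire [7:0] ascii    // ASCII code for the digit
);
    // {2'b11, digit} is the 6-bit value 11dddd. Assigning it to 8 bits
    // zero-extends it to 0011dddd, which is 8'h30 plus the digit.
    assign ascii = {2'b11, digit};
endmodule
```

For example, a digit value of 7 becomes 8'h37, the ASCII code for "7". No adder is needed because the low four bits of 8'h30 are all zero.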

Putting it all together, there are multiple state machines and counters controlling the final FizzBuzz circuit.

The main state machine controls the code above, moving through the characters of the line.

For each character, this state machine triggers the serial output module, and waits until the character has been output.

Inside the serial module, a state machine moves through each bit of the character. This state machine waits until the baud rate counter has measured out the width of the bit. When the serial output of the character is done, the serial module signals the main state machine.

The main state machine then moves to the next character in the line. When the line is done, the main state machine increments the BCD counter (counting from 1 to 100) and then starts outputting the next line.
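The handshake between the main state machine and the serial module might look roughly like the sketch below. It is a simplified illustration rather than the actual design: the signal names (send, done, idx, line_done), the fixed line length, and the two-phase structure are all assumptions.

```verilog
// Illustrative sketch: all names and the fixed LINE_LEN are hypothetical.
module char_sequencer #(
    parameter LINE_LEN = 6          // e.g. "Fizz" plus CR and LF
) (
    input  wire       clk,
    input  wire       rst,          // synchronous reset
    input  wire       done,         // pulse from the serial module: character finished
    output reg        send,         // one-clock pulse: start sending character idx
    output reg  [3:0] idx,          // position within the current line
    output reg        line_done     // one-clock pulse: advance the BCD counter
);
    localparam REQUEST = 1'b0, WAITING = 1'b1;
    reg phase;

    always @(posedge clk) begin
        send      <= 1'b0;          // defaults, overridden below when needed
        line_done <= 1'b0;
        if (rst) begin
            phase <= REQUEST;
            idx   <= 0;
        end else if (phase == REQUEST) begin
            send  <= 1'b1;          // hand the current character to the serial module
            phase <= WAITING;
        end else if (done) begin
            phase <= REQUEST;       // serial module finished; move on
            if (idx == LINE_LEN - 1) begin
                idx       <= 0;
                line_done <= 1'b1;
            end else begin
                idx <= idx + 1;
            end
        end
    end
endmodule
```

The point of the sketch is that "call the output routine and wait for it to return" has to be spelled out as explicit states and signals.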

Programming languages make it easy to do operations in sequence, perform loops, make subroutine calls and so forth.

But with an FPGA, you need to explicitly control when things happen, using state machines and counters to keep track of what's happening.

In exchange for this, FPGAs give you a huge degree of parallelism and control.

Running FizzBuzz on the FPGA board

To compile the Verilog code, I used Xilinx's ISE tool (below), which is a development environment that lets you write code, simulate it, and synthesize it into gate-level circuitry that can be loaded onto the FPGA.

Using the ISE tool is fairly straightforward, and explained in the Mojo tutorials. The synthesis process was slow compared to a compile, taking about 45 seconds for my FizzBuzz program.

![By writing Verilog code in Xilinx's ISE tool, you can program functionality into an FPGA.]()

By writing Verilog code in Xilinx's ISE tool, you can program functionality into an FPGA.

Once I had the code working in the simulator,[7] I downloaded it to the FPGA board over a USB cable.

I connected the FPGA output pin to a USB-to-serial adapter[6] and used a terminal emulator (screen) to display the serial output on my computer. I hit the reset button on the Mojo board and (after just a bit more debugging) the FizzBuzz output appeared (below).

![First page of output from the FizzBuzz FPGA, as displayed by the screen terminal emulator.]()

First page of output from the FizzBuzz FPGA, as displayed by the screen terminal emulator.

The image below shows the raw serial data from the FPGA (yellow).

This is the end result of the FizzBuzz circuitry running on the FPGA board—a sequence of pulses.

The oscilloscope also shows the decoded ASCII characters (green). This data is near the beginning of the FizzBuzz output, showing the lines for 2, 3 and 4. (CR and LF are carriage return and line feed.)

![The serial data signal (yellow) near the beginning of the FizzBuzz output. The ASCII decoding is in green.]()

The serial data signal (yellow) near the beginning of the FizzBuzz output. The ASCII decoding is in green.

What happens inside the FPGA?

You might wonder how a Verilog description of a circuit gets turned into digital logic, and how the FPGA implements this logic.

The ISE synthesis tool turns the Verilog design into circuitry suitable for implementation inside the FPGA.

It first synthesizes the Verilog code into a "netlist", specifying the logic and connections.

Next it translates the netlist into FPGA primitives, which are mapped onto the capabilities of the particular chip (the Spartan 6 in my case).

Finally, the place and route process optimizes the layout of the chip, minimizing the distance signals need to travel.

![Schematic of the FizzBuzz circuit.]()

Schematic of the FizzBuzz circuit.

The image above shows the schematic of the FizzBuzz circuit, as generated by the synthesis tools.

As you can see, the Verilog code turns into a large tangle of circuitry.

Each block is a flip flop, logic element, multiplexer or other unit.

These blocks make up the counters, state registers and logic for the FizzBuzz circuit.

While this looks like a lot of logic, it used less than 2% of the chip's capability.

A closeup (below) of the schematic shows a flip flop (labeled "fdre")[8] and a lookup table (labeled "lut5") from the BCD counter.

The nice thing about Verilog is that you can design the circuit at a high level, and it gets turned into the low-level circuitry. This is called RTL (register-transfer level) and lets you design using registers and high-level operations on them, without worrying about the low-level hardware implementation.

For instance, you can simply say count + 1 and this will generate the necessary binary adder circuitry.

![Detail of the schematic showing a flip flop and lookup table.]()

Detail of the schematic showing a flip flop and lookup table.

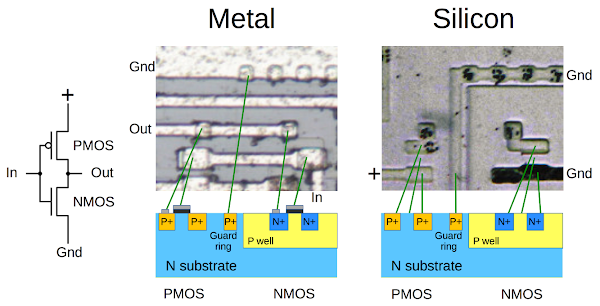
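To make the count + 1 remark concrete, here is a sketch that computes a 4-bit increment two ways: once at the register-transfer level and once spelled out as a chain of half adders. The module and signal names are invented for the example, and the gate version is only one possible implementation; the synthesis tool is free to map the addition onto lookup tables differently.

```verilog
// Illustrative sketch: increment4 and its ports are hypothetical names.
module increment4 (
    input  wire [3:0] count,
    output wire [3:0] rtl_next,    // what you write at the register-transfer level
    output wire [3:0] gate_next    // the same function spelled out as gates
);
    assign rtl_next = count + 4'd1;

    // Half-adder chain: a bit flips exactly when all lower bits are 1.
    wire c1 = count[0];
    wire c2 = count[1] & c1;
    wire c3 = count[2] & c2;

    assign gate_next[0] = ~count[0];
    assign gate_next[1] = count[1] ^ c1;
    assign gate_next[2] = count[2] ^ c2;
    assign gate_next[3] = count[3] ^ c3;
endmodule
```

Both outputs agree for every input, including the wrap from 15 back to 0, but only the first version stays readable as the counter gets wider.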

The FPGA chip uses an interesting technique to implement logic equations. Instead of wiring together individual gates, the logic is implemented with a lookup table (LUT), which is a flexible way of implementing arbitrary logic.

Each lookup table has 6 input lines, so it can implement any combinatorial logic with 6 inputs. With 6 inputs, there are 64 different input combinations, yielding a 64-line truth table. By storing this table as a 64-bit bitmap, the LUT can implement any desired logic function.

For example, part of the logic for the output pin is equivalent to the logic circuit below.

This is implemented by storing the 64-bit value FFFFA8FFFFA8A8A8 into the lookup table.

In the Spartan 6 chip, the LUT is implemented with 64 bits of static RAM, loaded when the FPGA is initialized.

Since the chip has 5720 separate lookup tables, it can be programmed to implement a lot of arbitrary logic.

![The gate logic implemented by one lookup table in the FPGA.]()

The gate logic implemented by one lookup table in the FPGA.
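The lookup-table idea can be modeled in a few lines of Verilog. This is a behavioral sketch, not the chip's actual primitive: the module name is made up, and it assumes that the six inputs are read as a row number with input 0 as the least-significant bit.

```verilog
// Illustrative sketch: lut6_model is a hypothetical behavioral model.
module lut6_model #(
    parameter [63:0] INIT = 64'hFFFFA8FFFFA8A8A8  // truth table value quoted in the text
) (
    input  wire [5:0] in,    // six inputs, treated as a row number 0..63
    output wire       out
);
    wire [63:0] truth = INIT;

    // The output is simply the stored bit for the current input combination.
    assign out = truth[in];
endmodule
```

Changing the 64-bit constant changes the logic function without changing the circuit, which is what makes the FPGA reprogrammable.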

The final piece of the FPGA puzzle is the switch matrix that connects the circuitry together in arbitrary ways.

In the Spartan 6, a handful of LUTs, flip flops and multiplexers are grouped into a configurable logic block (CLB).[9]

The CLBs are connected together by a switch matrix, as shown below.

Each switch matrix block is programmed to connect different wires together, allowing the FPGA to be wired as desired.

An important part of the FPGA synthesis process is positioning blocks to minimize the wiring distance, both to minimize signal propagation delay and to avoid running out of interconnect paths.

![The switching matrix in the Spartan 6 FPGA allows arbitrary interconnections between CLBs. From the User Guide.]()

The switching matrix in the Spartan 6 FPGA allows arbitrary interconnections between CLBs. From the User Guide.

Should you try an FPGA?

Personally, I was very reluctant to try out an FPGA because they seemed scary and weird.

While there is a learning curve, FPGAs aren't as difficult as I expected.

If you're interested in new programming paradigms, FPGAs will definitely give you a different perspective.

Things that you take for granted, such as performing operations in sequence, will move to the foreground with an FPGA.

You can experiment with high degrees of parallelism. And FPGAs will give you a better idea of how digital circuits work.

However, I wouldn't recommend trying FPGAs unless you have some familiarity with wiring up LEDs and switches and understand basic digital logic: gates, flip flops, and state machines.

If you're comfortable with an Arduino, though, an FPGA is a reasonable next step.

For most applications, a microcontroller can probably do the job as well as an FPGA and is easier to program.

Unless you have high data rates or require parallelism, an FPGA is probably overkill.

In my case, I found a microcontroller was barely powerful enough for my 3Mb/s Ethernet gateway project, so I'm looking into FPGAs for my next project.

Is the Mojo a good board to start with?

The Mojo FPGA development board is sold by Adafruit and Sparkfun, so I figured it would be a good hacker choice.

The Mojo was designed for people getting started with FPGAs, and I found it worked well in this role.

The makers of the Mojo wrote a solid collection of tutorials using Verilog.[10]

It was very helpful to use tutorials written for the specific board, since it minimized the amount of time I spent fighting with the board and tools.

The Mojo is programmed over a standard USB cable, which is more convenient than boards that need special JTAG adapters.

![The Mojo FPGA board. The Spartan-6 FPGA chip dominates the board. The Mojo FPGA board. The Spartan-6 FPGA chip dominates the board.]()

The Mojo FPGA board. The Spartan-6 FPGA chip dominates the board.

Although the Mojo has plenty of I/O pins, it doesn't have any I/O devices included except 8 LEDs.

It would be nicer to experiment with a board that includes buttons, 7-segment displays, VGA output, sensors and so forth.

(It's not hard to wire up stuff to the Mojo, but it would be convenient to have them included.)

Also, some development boards include external RAM but the Mojo doesn't, a problem for applications such as a logic analyzer that require a lot of storage.[11]

(You can extend the Mojo with an IO shield or RAM shield.)

A good introductory book to get started with the Mojo is Programming FPGAs; it also covers the considerably cheaper Papilio One and Elbert 2 boards.

A list of FPGA development boards is here if you want to look at other options.

Conclusion

An FPGA is an impractical way to implement FizzBuzz, but it was a fun project and I learned a lot about FPGA programming.

I certainly wouldn't get the FPGA job if FizzBuzz was used as an interview question, though!

My code is on github, but keep in mind I'm a beginner to FPGAs.

Follow me on Twitter or RSS to find out about my latest blog posts.

Notes and references